Spectral Attention Steering for Prompt Highlighting

January 27, 2026

1 min read

Weixian Waylon Li

Yuchen Niu

Yongxin Yang

Keshuang Li

Tiejun Ma

Shay B. Cohen

Abstract

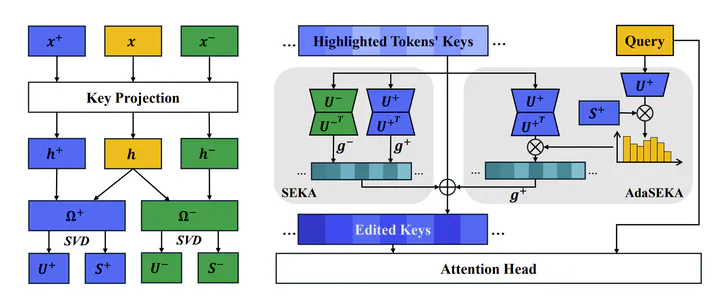

Steering a large language model’s attention towards user-specified highlighted text is a critical capability. Existing prompt highlighting methods are incompatible with modern efficient attention mechanisms like Flash Attention due to their reliance on post-hoc matrix editing. We introduce Spectral Editing Key Amplification (SEKA), a training-free steering method that tackles this by directly editing key embeddings before attention computation. SEKA learns universal relevance subspaces offline via spectral decomposition. We extend this to Adaptive SEKA (AdaSEKA), a query-adaptive variant that uses a training-free routing mechanism to dynamically combine multiple expert subspaces based on the prompt’s semantic intent. Our experiments show both methods significantly outperform strong baselines on standard steering benchmarks while adding much lower latency and memory overhead, ensuring full compatibility with optimised attention.
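As a rough illustration of what "editing key embeddings before attention computation" can look like, here is a minimal NumPy sketch. It is not the authors' implementation. The function names, the choice to find the subspace from paired key differences, the additive update `k' = k + gain * P k`, and the `gain` parameter are all assumptions made for illustration. The only parts taken from the abstract are that a subspace is learned offline by spectral decomposition and that keys are modified before attention is computed.

```python
import numpy as np


def learn_projection(pos_keys, neg_keys, rank=1):
    """Offline step (assumed form): build a projector onto the top singular
    directions of the difference between 'relevant' and 'irrelevant' keys."""
    diff = np.asarray(pos_keys, dtype=float) - np.asarray(neg_keys, dtype=float)
    _, _, vt = np.linalg.svd(diff, full_matrices=False)
    basis = vt[:rank]                 # (rank, d) with orthonormal rows
    return basis.T @ basis            # (d, d) orthogonal projector


def amplify_keys(keys, highlight_mask, projection, gain=1.0):
    """Inference step (assumed form): k' = k + gain * P k, applied only to
    highlighted tokens. The attention matrix itself is never touched."""
    keys = np.asarray(keys, dtype=float)
    mask = np.asarray(highlight_mask, dtype=float)[:, None]
    return keys + gain * mask * (keys @ projection)


def attention_weights(query, keys):
    """Plain scaled dot-product attention for one query, used only to inspect
    the effect of the key edit."""
    scores = keys @ query / np.sqrt(keys.shape[-1])
    weights = np.exp(scores - scores.max())
    return weights / weights.sum()


# Toy data: relevant and irrelevant keys differ only along the first axis.
pos = np.array([[2.0, 0.0, 0.0, 0.0], [3.0, 1.0, 0.0, 0.0], [1.5, 0.0, 1.0, 0.0]])
neg = np.array([[1.0, 0.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0], [1.0, 0.0, 1.0, 0.0]])
projection = learn_projection(pos, neg, rank=1)

keys = np.array([[1.0, 0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0]])
query = np.array([1.0, 0.0, 0.0, 0.0])
steered = amplify_keys(keys, highlight_mask=[0, 1], projection=projection)

before = attention_weights(query, keys)
after = attention_weights(query, steered)
print("attention before:", before)
print("attention after: ", after)
```

Because the edit is applied to the keys rather than to the attention scores, the steered keys can in principle be passed to any attention kernel unchanged. This is the property the abstract describes as compatibility with Flash Attention.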

Type

Publication

Accepted to The Fourteenth International Conference on Learning Representations (ICLR) 2026

Citation

@inproceedings{li-2026spectral,
  title={Spectral Attention Steering for Prompt Highlighting},
  author={Li, Weixian Waylon and Niu, Yuchen and Yang, Yongxin and Li, Keshuang and Ma, Tiejun and Cohen, Shay B.},
  booktitle={The Fourteenth International Conference on Learning Representations},
  year={2026},
  url={https://openreview.net/forum?id=XfLvGIFmAN}
}